Imaging Technology on Mars Breaks New Ground

Read on the ON Semiconductor blog

While the rest of the world continues to talk about autonomous vehicles, NASA recently landed one on another planet! On February 18th, 2021, the Mars 2020 mission delivered the Perseverance rover safely to the planet’s surface. The world watched, albeit not exactly in real time, as the rover entered the planet’s atmosphere, descended and finally landed.

That entry, descent and landing process, referred to as EDL, was entirely autonomous. It had to be, because the delay between the rover sending a transmission and mission control receiving it was, at that time, around 11 minutes.
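To put that delay in perspective, here is a rough back-of-the-envelope sketch. It assumes the quoted figure of around 11 minutes is a one-way delay and that the signal travels at the speed of light in a vacuum; the function names are illustrative only.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact by definition


def one_way_distance_km(delay_minutes: float) -> float:
    """Distance a radio signal covers during the given one-way delay."""
    return delay_minutes * 60 * SPEED_OF_LIGHT_KM_S


def round_trip_minutes(delay_minutes: float) -> float:
    """Shortest time to see an event and get a command back to the rover."""
    return 2 * delay_minutes


distance_km = one_way_distance_km(11)
print(f"Approximate Earth-Mars distance: {distance_km / 1e6:.0f} million km")
print(f"Round trip for a single command: {round_trip_minutes(11):.0f} minutes")
```

With a round trip of roughly 22 minutes and a descent lasting only about seven, any instruction from Earth would arrive long after the rover had already reached the surface.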

Previous missions faced the same challenge, and only around 40% of all missions to Mars have landed successfully. That alone makes Perseverance a success, but what is even more astonishing is that this rover was the first mission to actively navigate during its descent. It achieved this by using a new system pioneered for the Mars 2020 mission: Terrain-Relative Navigation.

Previous missions used data collected prior to entering the planet’s atmosphere to decide where to land. This had an estimation error of as much as 3 km, which meant previous rovers had to spend days or even weeks traveling from the landing site to the area of scientific interest. It also meant that previous landing zones were chosen not for their scientific interest but because they offered a relatively smooth landing. Trying to land a rover ‘blindfolded’ was just too difficult, because the onboard technology couldn’t compensate using the sensors available. Using image sensors to provide live video of the landing, as it is happening, takes the blindfold off.

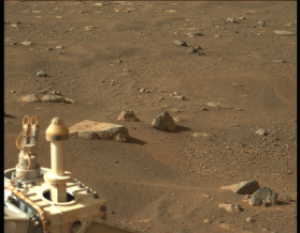

With Terrain-Relative Navigation, everything changes. The rover can now target more challenging landing zones because it has more control over where it actually touches down. This is how the team was able to put Perseverance in the Jezero Crater. It also means that future rovers will have even more freedom to land where they want and not just where they can.

The rover navigated its way down to the surface by taking images of the landscape and comparing them to a library of images stored onboard. Landmarks in the images were identified and used for orientation and navigation. The EDL system could then use its known location to consult another library of mapped areas, to navigate to its destination. It could even use its location to make adjustments during descent if it decided it wasn’t where it expected to be.
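The idea of comparing a live image against a stored map can be illustrated with a small template-matching sketch. This is not the algorithm flown on Perseverance, which is not described here. It is a generic technique, zero-mean normalized cross-correlation, applied to synthetic data, and every name in it (`ncc`, `crop`, `locate_patch`, the random "map") is hypothetical.

```python
import math
import random


def ncc(a, b):
    """Zero-mean normalized cross-correlation of two equal-length pixel lists."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0.0:
        return 0.0  # flat region: no texture to match against
    return sum(x * y for x, y in zip(da, db)) / denom


def crop(image, row, col, size):
    """Flatten the size-by-size window whose top-left corner is (row, col)."""
    return [image[r][c]
            for r in range(row, row + size)
            for c in range(col, col + size)]


def locate_patch(reference_map, patch):
    """Return the (row, col) in reference_map where patch correlates best."""
    size = len(patch)
    flat_patch = crop(patch, 0, 0, size)
    rows = len(reference_map) - size + 1
    cols = len(reference_map[0]) - size + 1
    candidates = [(r, c) for r in range(rows) for c in range(cols)]
    return max(
        candidates,
        key=lambda rc: ncc(flat_patch, crop(reference_map, rc[0], rc[1], size)),
    )


# Synthetic stand-in for a stored map: random texture, not real terrain.
rng = random.Random(0)
reference_map = [[rng.random() for _ in range(40)] for _ in range(40)]

# Simulated "descent image": a noisy 12x12 view of a known map location.
true_position = (17, 9)
patch = [[reference_map[17 + r][9 + c] + rng.gauss(0.0, 0.05)
          for c in range(12)] for r in range(12)]

estimated_position = locate_patch(reference_map, patch)
print("estimated:", estimated_position, "true:", true_position)
```

A brute-force search like this is only practical for tiny images. A real system would also have to cope with changes in scale, rotation and lighting, which this sketch ignores.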

This is a huge advance in Mars mission technology, and it was enabled by cameras based on image sensors that are available as commercial off-the-shelf (COTS) components. Using COTS technology was part of NASA’s strategy.

The Perseverance mission has a total of 23 cameras. Nineteen are mounted on the rover itself, and 16 of those are intended for use while the rover is on the surface. The remaining seven cameras, spread across the rover and the entry vehicle, were there largely to support the EDL phase of the journey. ON Semiconductor supplied the image sensors for six of those seven. Our broad image sensor portfolio provides options to satisfy the requirements of various end applications. It gives engineers the flexibility to combine performance characteristics such as high speed, high sensitivity, low power and a compact package footprint to meet specific application requirements.

The footage from some of those cameras has now been viewed millions of times. NASA provided the footage of the EDL phase of the mission. Three of the cameras, designated Parachute Uplook Cameras (PUC), were used purely to observe the parachute as it opened on the descent. The information and insights this provided will shape future missions. The PUCs operated at 75 fps for around 35 seconds after the parachute was deployed, then switched to 30 fps for the remainder of the descent. Two of the other cameras, designated Rover Uplook Camera (RUC) and Rover Downlook Camera (RDC), provided similar insights and operated at 30 fps for the whole of the EDL period.
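The frame rates quoted above translate into frame counts with simple arithmetic. The sketch below uses only the 75 fps, 35 second and 30 fps figures from the text. The length of the slower phase is not given, so `remaining_seconds` is left as a parameter, and the function name is illustrative.

```python
def puc_frames(remaining_seconds: float = 0.0,
               burst_seconds: float = 35.0,
               burst_fps: float = 75.0,
               later_fps: float = 30.0) -> float:
    """Frames from one Parachute Uplook Camera: fast burst, then slower phase."""
    return burst_fps * burst_seconds + later_fps * remaining_seconds


burst_only = puc_frames()  # frames captured during the 75 fps phase alone
print(f"One PUC, 75 fps phase only: {burst_only:.0f} frames")
print(f"Three PUCs, 75 fps phase only: {3 * burst_only:.0f} frames")
```

Each camera therefore captured a few thousand frames in the first half minute after parachute deployment, before the 30 fps phase added more.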

The sensors used in the PUC, RUC and RDC are the PYTHON1300, a 1.3 megapixel, 1/2-inch CMOS image sensor with 1280 by 1024 pixels. Together, all three cameras captured over 27,000 images during the ‘seven minutes of terror’.

The sixth ON Semiconductor image sensor now residing on Mars is a PYTHON5000, a 5.3 megapixel image sensor with a 1-inch optical format and a pixel array of 2592 by 2048 pixels. This sensor is used in the Lander Vision System and is referred to as the LCAM. This camera has a field of view of 90° by 90° and provides the input to the onboard Terrain-Relative Navigation system, which uses it for map localization.
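As a quick sanity check on those numbers, the sketch below derives the megapixel figures from the pixel arrays and estimates how much ground a 90° field of view covers. The ground calculation assumes an ideal, distortion-free camera pointing straight down at flat terrain, and the 4,000 m altitude is a purely hypothetical value chosen for illustration.

```python
import math

# Pixel arrays as quoted in the text: (width, height).
SENSORS = {"PYTHON1300": (1280, 1024), "PYTHON5000": (2592, 2048)}


def megapixels(width: int, height: int) -> float:
    """Total pixel count in millions."""
    return width * height / 1e6


def ground_footprint_m(altitude_m: float, fov_degrees: float) -> float:
    """Width of ground seen by a downward-pointing camera over flat terrain."""
    return 2 * altitude_m * math.tan(math.radians(fov_degrees) / 2)


for name, (width, height) in SENSORS.items():
    print(f"{name}: {megapixels(width, height):.2f} megapixels")

altitude_m = 4000.0  # hypothetical altitude, not a mission figure
footprint = ground_footprint_m(altitude_m, 90.0)
print(f"Footprint at {altitude_m:.0f} m: about {footprint:.0f} m across")
```

With a 90° field of view the footprint is simply twice the altitude, so the camera sees a wide area early in the descent and progressively finer detail as the vehicle gets closer to the surface.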

It wouldn’t be accurate to say Perseverance couldn’t have landed without these cameras or the image sensors inside them. Past success has shown that it could. However, it is fair to say that the Terrain-Relative Navigation system couldn’t operate without the cameras, and that has enabled NASA to explore an entirely new area of Mars. That area may provide proof of microbial life on Mars, which is something anyone, whether they are involved with the mission at some level or not, could celebrate.

Learn more about ON Semiconductor’s portfolio of image sensors, or check out some of our latest industrial imaging solutions below.

- XGS 45000, CMOS Image Sensor, 44.7 Mp, Global Shutter

- XGS 32000, CMOS Image Sensor, 32.5 Mp, Global Shutter

- XGS 5000, CMOS Image Sensor, 5.3 Mp, Global Shutter

Subscribe to the ON Semiconductor blog to learn more!